INSIGHTS

Battling to a Draw:

Defense Expert Rebuttal Can Neutralize Prosecution Fingerprint Evidence

OVERVIEW

While all forensic science disciplines carry some risk of error, the public typically believes that testimony from fingerprint experts is infallible. The defense can counter this tendency by calling rebuttal experts who educate jurors about the risk of error or present opposing evidence. CSAFE-funded researchers conducted a survey to study how rebuttal experts affect jurors’ perceptions.

Lead Researchers

Gregory Mitchell

Brandon L. Garrett

Journal

Applied Cognitive Psychology

Publication Date

4 April 2021

Publication Number

IN 118 IMPL

Goals

1

Determine if a rebuttal expert’s testimony can affect jurors’ beliefs in the reliability of fingerprint evidence.

2

Examine the responses of jurors with different levels of concern about false acquittals versus false convictions.

The Study

Participants completed a survey that included questions about their concerns over false convictions and false acquittals.

Participants were then randomly assigned to one of the following mock trial conditions:

Control condition with no fingerprint evidence

Fingerprint expert testimony with no rebuttal

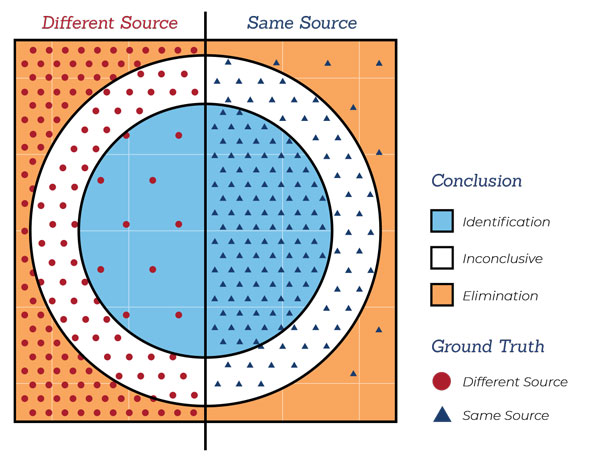

A methodological rebuttal: the expert focuses on the subjective nature of fingerprint analysis as a whole

An “inconclusive” rebuttal: the expert testifies that their own comparison was inconclusive because of the poor quality of the evidence

An “exclusion” rebuttal: the expert states that their own comparison shows the defendant could not have been the source of the fingerprints

Results

Figure: Percentage of participants voting for conviction, by trial condition

- Every group that heard fingerprint expert testimony from the prosecution had a higher conviction rate than the control group.

- After hearing only the prosecution’s expert, 76% of participants voted to convict. The methodological rebuttal lowered this to 58%; with the inconclusive rebuttal, 38% voted to convict, and with the exclusion rebuttal, only 32%.

Figure: Mean rated likelihood that the defendant (D) committed the robbery, by trial error aversion

- Only in the control group did participants more concerned about false convictions outnumber those more concerned about false acquittals.

- Those with strong aversions to false acquittals were less likely to side with the rebuttal expert, except in the case of an exclusion rebuttal.

Focus on the future

Although the exclusion and inconclusive rebuttals produced the best results for the defense, the methodological rebuttal still significantly affected jurors’ views of fingerprint evidence.

Traditional cross-examination of forensic experts appears to produce mixed results. This suggests that rebuttal testimony may be a more effective and reliable way to challenge forensic evidence, and one that may produce long-term changes in jurors’ attitudes.

While a rebuttal expert’s testimony can be powerful, much of its effect depends on individual jurors’ aversions to trial errors. This could become an important consideration in jury selection.