CSAFE invites researchers, collaborators, and members of the broader forensics and statistics communities to join the next session of our Spring 2022 Webinar Series on Friday, April 22, 2022, from 11:00 a.m. to noon CT. The presentation will be “Shining a Light on Black Box Studies.”

Presenters:

Dr. Kori Khan

Assistant Professor – Iowa State University

Dr. Alicia Carriquiry

Director – CSAFE

Presentation Description:

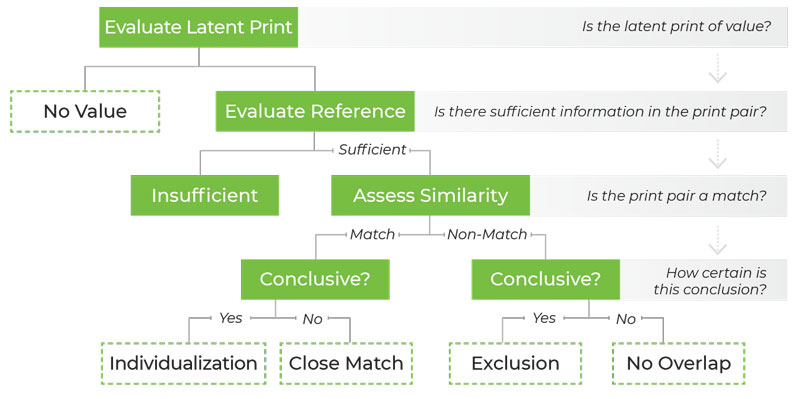

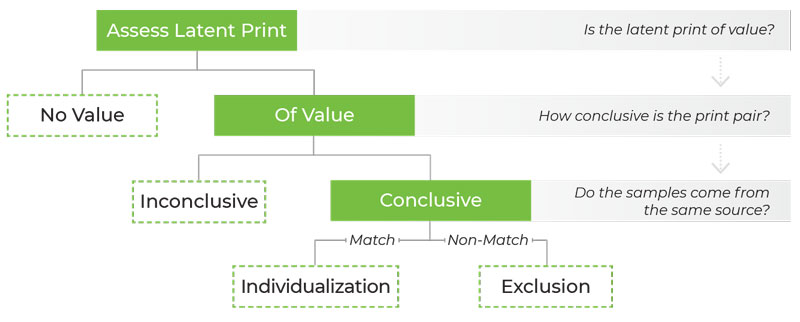

The American criminal justice system relies heavily on conclusions reached by the forensic science community. Over the last ten years, interest has grown in assessing the validity of the methods used to reach those conclusions. For pattern comparison disciplines, however, this assessment is difficult because the methods rely on visual examinations and subjective determinations. Recently, “black box studies” have been put forward as the gold standard for estimating a discipline’s error rates, to assist judges in assessing the validity and admissibility of forensic evidence analysis. Such studies have since been conducted for various disciplines, and judges across the country cite their findings to justify admitting forensic evidence testimony.

However, these black box studies suffer from flawed experimental designs and inappropriate statistical analyses. We show that these limitations likely lead to underestimated error rates and prevent researchers from drawing conclusions about a discipline’s error rates. With a view to future studies, we propose minimal statistical criteria for black box studies and describe some of the data that must be available to plan and implement them.

The webinars are free and open to the public; researchers, collaborators, and members of the broader forensics and statistics communities are especially encouraged to attend. Each 60-minute webinar includes time for discussion and questions.

Sign up using the form below (the form works best in the Chrome and Safari web browsers):