A new study assessing the accuracy and reproducibility of practicing bloodstain pattern analysts’ conclusions will be the focus of an upcoming Center for Statistics and Applications in Forensic Evidence (CSAFE) webinar.

The webinar, "Bloodstain Pattern Analysis Black Box Study," will be held Thursday, Oct. 14, from 11 a.m. to noon CDT. It is free and open to the public.

During the webinar, Austin Hicklin, director at the Forensic Science Group; Paul Kish, a forensic consultant; and Kevin Winer, director at the Kansas City Police Crime Laboratory, will discuss their recently published article, "Accuracy and Reproducibility of Conclusions by Forensic Bloodstain Pattern Analysts." The article was published in the August issue of Forensic Science International.

From the Abstract:

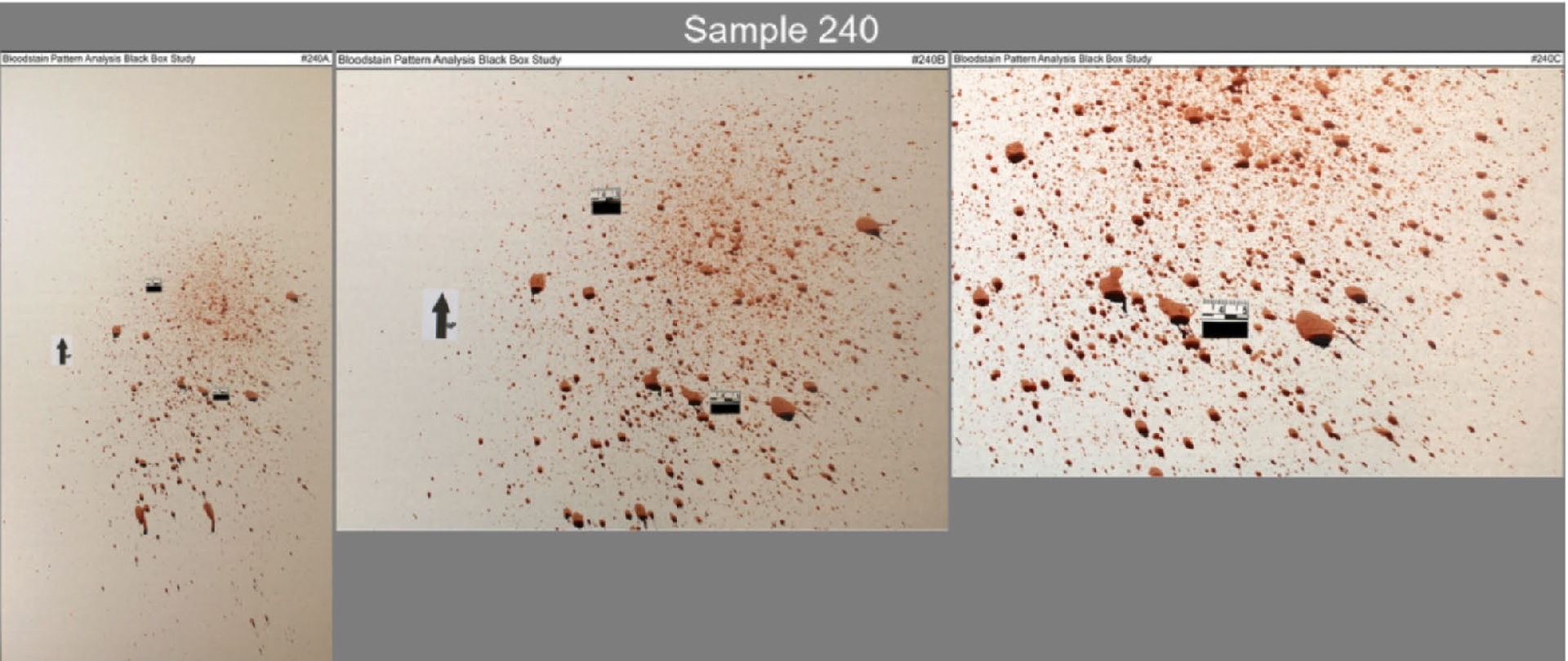

Although the analysis of bloodstain pattern evidence left at crime scenes relies on the expert opinions of bloodstain pattern analysts, the accuracy and reproducibility of these conclusions have never been rigorously evaluated at a large scale. We investigated conclusions made by 75 practicing bloodstain pattern analysts on 192 bloodstain patterns selected to be broadly representative of operational casework, resulting in 33,005 responses to prompts and 1760 short text responses. Our results show that conclusions were often erroneous and often contradicted other analysts. On samples with known causes, 11.2% of responses were erroneous. The results show limited reproducibility of conclusions: 7.8% of responses contradicted other analysts. The disagreements with respect to the meaning and usage of BPA terminology and classifications suggest a need for improved standards. Both semantic differences and contradictory interpretations contributed to errors and disagreements, which could have serious implications if they occurred in casework.

The study was supported by a grant from the U.S. National Institute of Justice. Kish and Winer are members of CSAFE’s Research and Technology Transfer Advisory Board.

To register for the October webinar, visit https://forensicstats.org/events.

The CSAFE Fall 2021 Webinar Series is sponsored by the National Institute of Standards and Technology (NIST) through cooperative agreement 70NANB20H019.

CSAFE researchers are also undertaking projects to develop objective analytic approaches to enhance the practice of bloodstain pattern analysis. Learn more about CSAFE’s BPA projects at forensicstats.org/blood-pattern-analysis.