Researchers from the Center for Statistics and Applications in Forensic Evidence (CSAFE) have tested the performance of a quantitative method for comparing shoeprint images.

The study, published in Forensic Science International, was led by Alicia Carriquiry, CSAFE director and Distinguished Professor and President’s Chair in Statistics at Iowa State University, and Soyoung Park, assistant professor of statistics at Pusan National University.

Footwear examiners currently rely on training and expertise to visually assess the similarity between two or more footwear impressions. Shoeprints found at a crime scene are often partial or smudged, which makes it challenging to compare them to a reference image. Reliable, quantitative methods have yet to be validated for use in real cases, said Carriquiry, corresponding author of the study.

Carriquiry said that while some promising algorithms have been proposed to carry out the comparison, testing of these algorithms has mostly focused on high-quality images of crime-scene impressions.

“For algorithmic tools to be useful for footwear examiners, they must be shown to perform well even when one of the images in the comparison is degraded or partially observed,” she said.

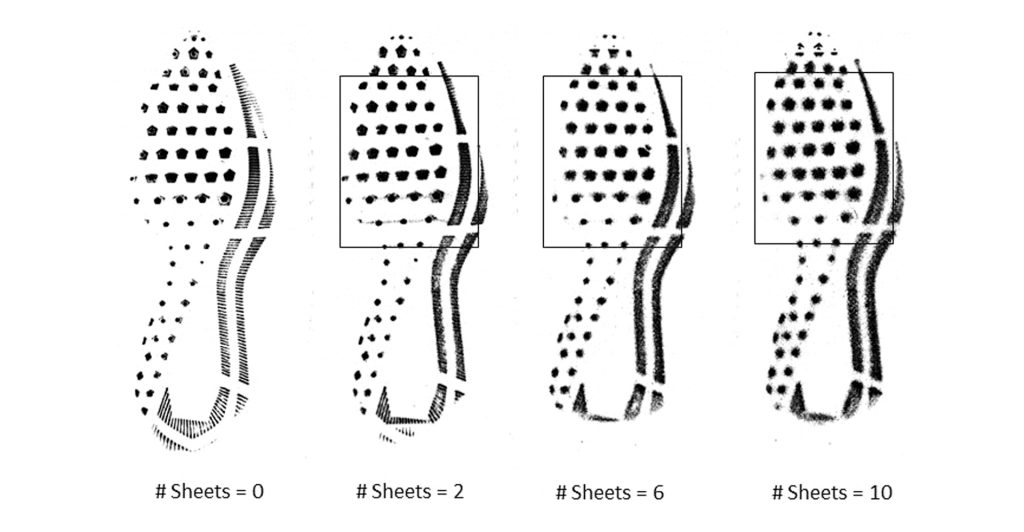

Carriquiry and Park created a dataset of impression images using 24 pairs of Nike shoes that volunteers had worn for six months. The shoes were scanned with 0 to 10 sheets of paper placed between the shoe and the scanner to simulate different levels of degradation. In addition, the researchers deleted about half of each degraded image to mimic a partial impression. They obtained 864 reference images and constructed 1,728 comparison pairs, half of which were mated (both images coming from the same shoe) and half non-mated.
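To make the idea of mated and non-mated pairs concrete, here is a minimal Python sketch of how a balanced set of comparison pairs could be drawn from labeled images. It is not the procedure used in the study; the function name `build_balanced_pairs`, the toy shoe labels and the sampling scheme are all assumptions made for illustration.

```python
from itertools import combinations
import random


def build_balanced_pairs(images, seed=0):
    """Split all image pairs into mated / non-mated and balance the two groups.

    `images` is a list of (shoe_id, image_id) tuples. This layout is a
    hypothetical one chosen for the sketch, not the study's data format.
    """
    mated, non_mated = [], []
    for a, b in combinations(images, 2):
        # A pair is "mated" when both images come from the same shoe.
        if a[0] == b[0]:
            mated.append((a, b))
        else:
            non_mated.append((a, b))

    # Keep equal numbers of each kind so the comparison set is half mated.
    rng = random.Random(seed)
    n = min(len(mated), len(non_mated))
    return rng.sample(mated, n), rng.sample(non_mated, n)


# Toy data: 4 hypothetical shoes with 3 images each (not the study's images).
images = [(shoe, img) for shoe in ["A", "B", "C", "D"] for img in range(3)]
mated, non_mated = build_balanced_pairs(images)
print(len(mated), len(non_mated))  # prints "12 12"
```

In the study itself, the same half-and-half split applied to 1,728 pairs corresponds to 864 mated and 864 non-mated comparisons.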

They tested the robustness of the MC-COMP algorithm (Park and Carriquiry, 2020, Statistical Analysis and Data Mining) when it is applied to different image descriptors, which identify distinct groups of pixels in an image, such as corners, lines or blobs. Carriquiry and Park ran their comparisons using six different combinations of descriptors to determine which model had the best balance of accuracy and computational efficiency.

Overall, their findings were encouraging, and all tested models showed promise. When both images being compared were of good quality, it was possible to reach an accuracy of about 95 percent. When one of the two images was blurred, the algorithm did lose discriminating power, but the accuracy was still about 85 to 88 percent.
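For readers unfamiliar with the metric, accuracy here can be read as the share of image pairs that a classifier labels correctly as mated or non-mated. The short Python sketch below shows that calculation on made-up decisions; the helper `accuracy` and the toy labels are hypothetical and do not reproduce the study's data or results.

```python
def accuracy(true_labels, predicted_labels):
    """Fraction of pairs whose predicted label matches the true label."""
    if len(true_labels) != len(predicted_labels):
        raise ValueError("label lists must have the same length")
    correct = sum(t == p for t, p in zip(true_labels, predicted_labels))
    return correct / len(true_labels)


# Toy example: 10 mated (True) and 10 non-mated (False) pairs, invented here.
truth = [True] * 10 + [False] * 10
predicted = list(truth)
predicted[0] = False  # one mated pair wrongly called non-mated

print(accuracy(truth, predicted))  # prints 0.95
```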

Carriquiry said there is still much work and experimentation to carry out before an algorithm could be used in real casework. A major roadblock to this type of research is the need for large databases with realistic footwear impressions.

Carriquiry said that CSAFE is constructing a more extensive database that will eventually be publicly available to aid other researchers working in this area. She said that as more databases are published, it may be possible to refine and adapt algorithmic approaches to quantitatively assess the similarity between two footwear outsole images.

Carriquiry and Park note that algorithms will not replace well-trained examiners. Instead, accurate and reliable methods will become useful tools to aid examiners in their work.

Download the journal article and read the insights at https://forensicstats.org/blog/2022/03/01/insights-the-effect-of-image-descriptors-on-the-performance-of-classifiers-of-footwear-outsole-image-pairs/.

Learn more about Shoeprintr, the R package developed by CSAFE and used for the calculations described in the journal article, at https://github.com/CSAFE-ISU/shoeprintr.

Read more about CSAFE’s previous work on developing an algorithm to compare footwear outsole images at https://forensicstats.org/blog/2020/02/28/insights-an-algorithm-to-compare-two-dimensional-footwear-outsole-images-using-maximum-cliques-and-speeded-up-robust-features/.