INSIGHTS

Mt. Everest—

We Are Going to Lose Many:

A Survey of Fingerprint Examiners’ Attitudes Towards Probabilistic Reporting

OVERVIEW

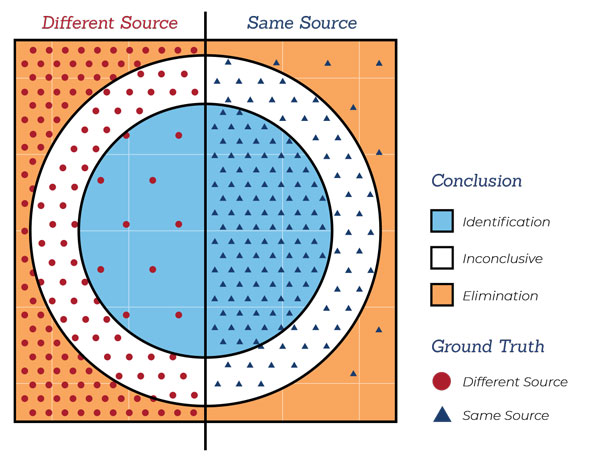

Forensic examiners have traditionally used categorical language in their reports, presenting evidence in broad terms such as “identification” or “exclusion.” Recent years have seen efforts to promote more probabilistic language, but many examiners have expressed concerns about the proposed change.

Researchers funded by CSAFE surveyed fingerprint examiners to better understand how examiners feel about probabilistic reporting and to identify obstacles impeding its adoption.

Lead Researchers

H. Swofford

S. Cole

V. King

Journal

Law, Probability, and Risk

Publication Date

7 April 2021

Publication Number

IN 120 IMPL

Goals

1. Learn what kind of language forensic examiners currently use when reporting evidence.

2. Gauge attitudes toward probabilistic reporting and the reasoning behind those attitudes.

3. Explore examiners’ understanding of probabilistic reporting.

The Study

- 301 friction ridge fingerprint examiners participated in a multiple-part survey.

- The survey asked how participants currently report their results: categorically or probabilistically.

- The survey also asked participants’ opinion on probabilistic reporting, whether they believed it was an appropriate direction for their community to take, and the reasoning behind their opinions.

- Finally, the survey asked participants to describe probabilistic reporting in their own words.

Results

probabilistic language was not an appropriate direction for the field.

- The most common concern was that “weaker,” more uncertain terms could be misunderstood by jurors or used by defense attorneys to “undersell” the strength of examiners’ findings.

- Another concern was that a viable probabilistic model was not ready for use in a field as subjective as friction ridge analysis, and that such a model may not even be possible.

- While many felt that probabilistic language may be more accurate, they preferred categorical terms as “stronger” and more in line with over a century of institutional norms.

Focus on the future

The views of the participants were not a handful of outdated “myths” that need to be debunked, but a wide and varied array of strongly held beliefs. Many practitioners are concerned about “consumption” issues (how lawyers, judges, and juries will understand the evidence) that are arguably outside their role as forensic scientists.

While many participants expressed interest in probabilistic reporting, they also felt they were not properly trained to understand probabilities, since such training has never been a formal requirement. Additional education and resources could help examiners adopt the practice more confidently.