On April 22, the Center for Statistics and Applications in Forensic Evidence (CSAFE) hosted the webinar, Shining a Light on Black Box Studies. It was presented by Dr. Kori Khan, an assistant professor in the department of statistics at Iowa State University, and Dr. Alicia Carriquiry,

CSAFE director and Distinguished Professor and President’s Chair in Statistics at Iowa State.

In the webinar, Khan and Carriquiry used two case studies—the Ames II ballistics study and a palmar prints study by Heidi Eldridge, Marco De Donno, and Christophe Champod (referred to in the webinar as the EDC study)—to illustrate the common problems of examiner representation and high levels of non-response (also called missingness) in Black Box studies, as well as recommendations for addressing these issues in the future.

If you did not attend the webinar live, the recording is available at https://forensicstats.org/blog/portfolio/shining-a-light-on-black-box-studies/

What is Foundational Validity?

To start to understand Black Box studies, we must first establish foundational validity. The 2016 PCAST report brought Black Box studies into focus and defined them to be a thing of interest. The report detailed that in order for these feature comparison types of disciplines, we need to establish foundational validity, which means that empirical studies must show that, with known probability:

- An examiner obtains correct results for true positives and true negatives.

- An examiner obtains the same results when analyzing samples from the same types of sources.

- Different examiners arrive at the same conclusions.

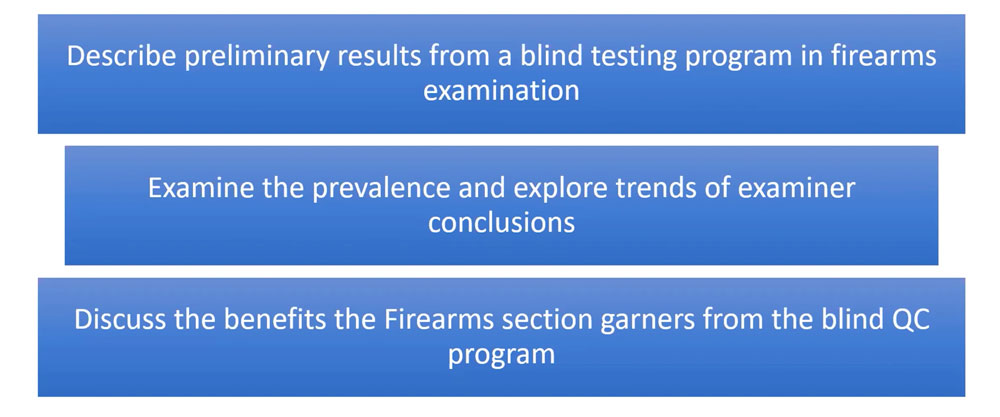

What is a Black Box Study?

The PCAST report proposed that the only way to establish foundational validity for feature comparison methods that rely on some amount of objective determination is through multiple, independent Black Box studies. In these studies, the examiner is supposed to be considered a “Black Box,” meaning there is some amount of subjective determination.

Method: Examiners are given test sets and samples and asked to render opinions about what their conclusion would have been if this was actual casework. Examiners are not asked about how they arrive at these conclusions. Data is collected and analyzed to establish accuracy. In a later phase, participants are given more data and their responses are again collected and then measured for repeatability and reproducibility.

Goal: The goal with Black Box studies is to analyze how well the examiners perform in providing accurate results. Therefore, in these studies, it is essential that ground truth be known with certainty.

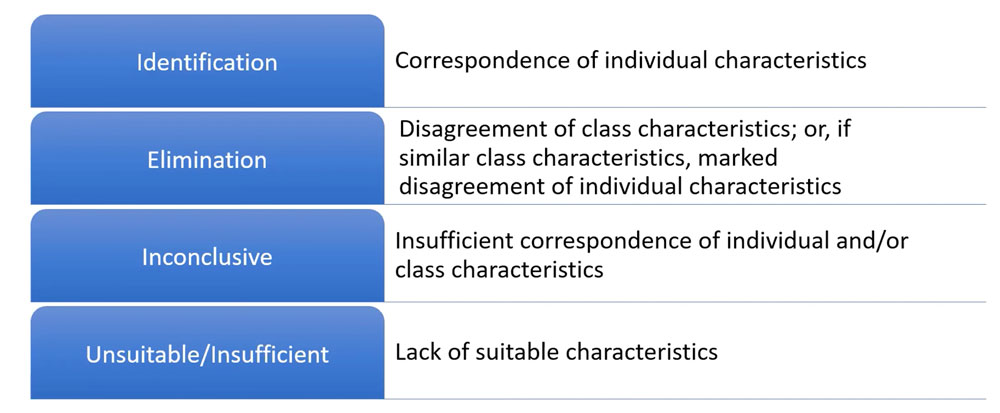

What are the common types of measures in Black Box studies?

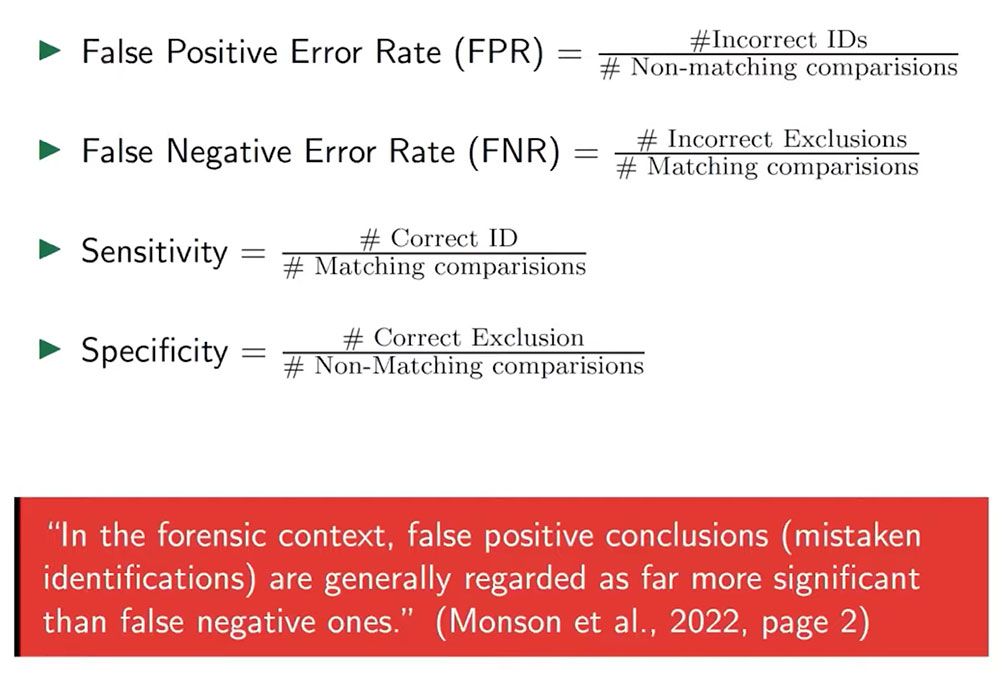

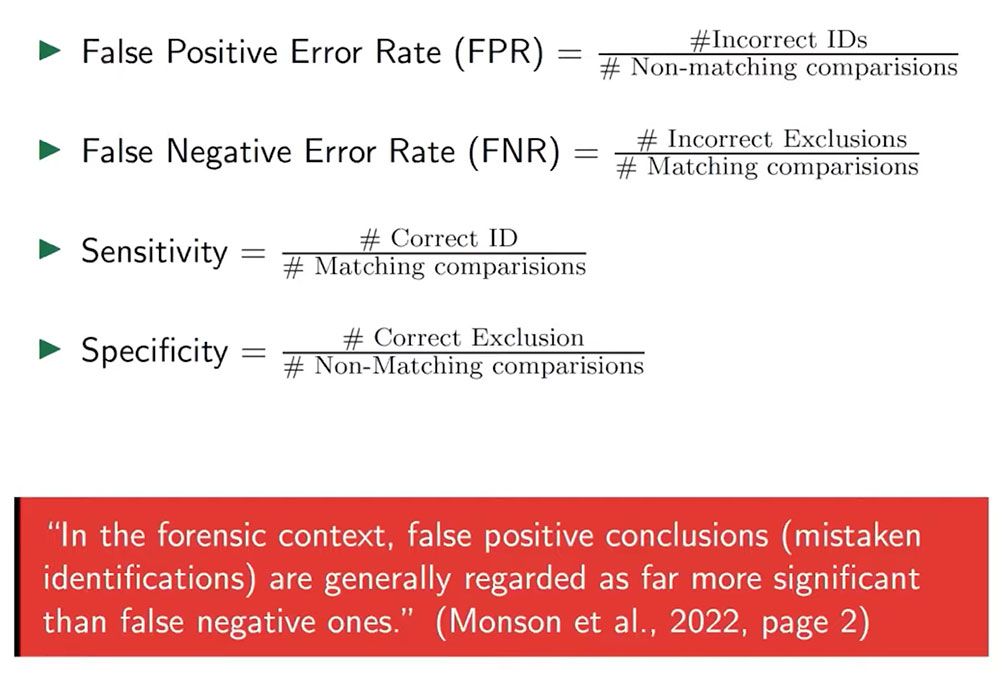

The four common types of measures are False Positive Error Rate (FPR), False Negative Error Rate (FNR), Sensitivity, and Specificity. Inconclusives are generally excluded from Black Box studies as neither an incorrect identification or incorrect exclusions, so inconclusive decisions are not treated as errors.

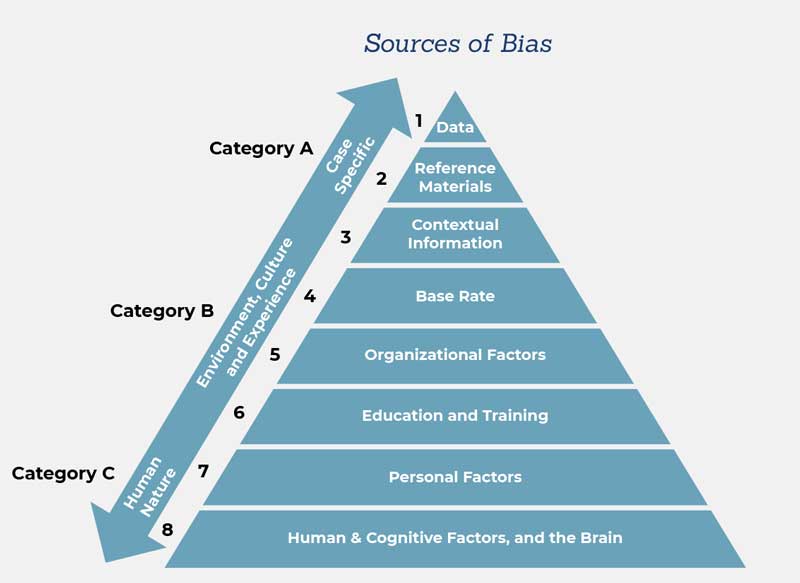

What are some common problems in some existing Black Box studies?

Representative Samples of Examiners

In order for results to reflect real-world scenarios, we need to ensure that the Black Box volunteer participants are representative of the population of interest. In an ideal scenario, volunteers are pulled from a list of persons within the population of interest, though this is not always possible.

All Black Box studies rely on volunteer participation, which can lead to self-selection bias, meaning those who volunteer are different from those who don’t. For example, perhaps those who volunteer are less busy than those who don’t volunteer. Therefore, it’s important that Black Box studies have inclusion criteria to help make the volunteer set as representative of the population of interest as possible.

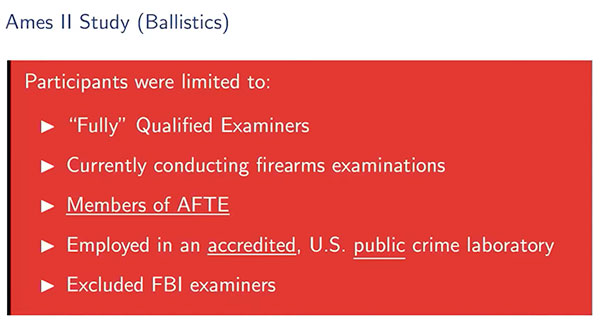

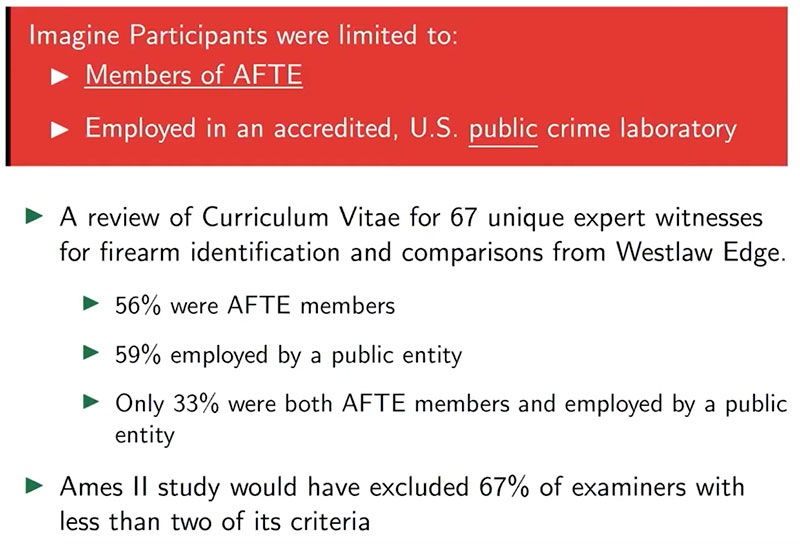

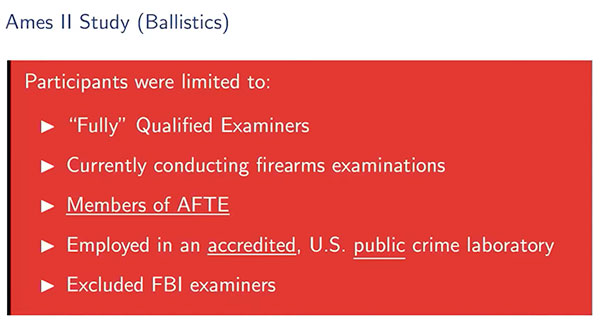

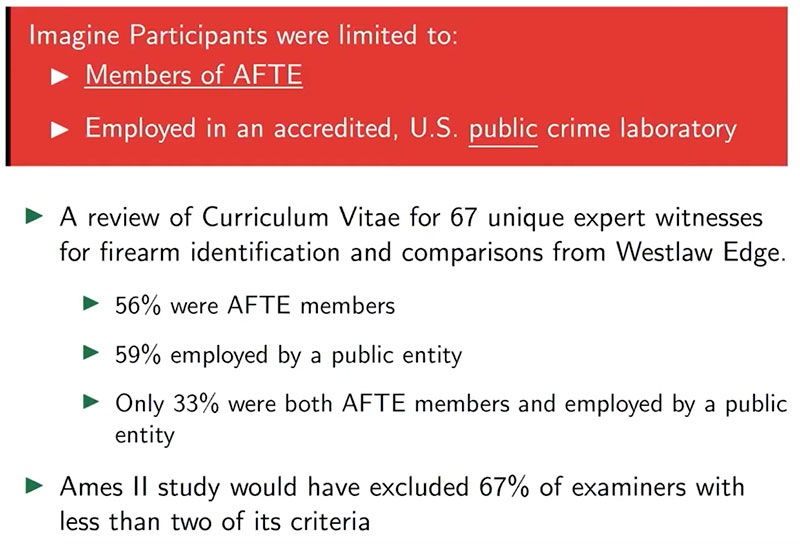

In the Ames II case study, volunteers were solicited through the FBI and the Association of Firearm and Toolmarks (AFTE) contact list. Participants were limited by the following criteria:

Problems with this set:

- Many examiners do not work for an accredited U.S. public crime laboratory.

- Many examiners are not current members of AFTE.

Overall, this is strong evidence in this study that the volunteer set does not match or represent the population of interest, which can negatively influence the accuracy of Black Box study results.

Handling Missing Data

Statistical literature has many rules of thumb stating that it is okay to carry out statistical analyses on the observed data if the missing data accounts for between 5–20% and the missingness is “ignorable”. If missingness is non-ignorable, any amount of missingness can bias estimates. Across most Black Box studies, missing data is between 30–40%. We can adjust for some non-response, but first we must know whether it’s ignorable or non-ignorable.

- Adjusting for missing data depends on the missingness mechanism (potentially at two levels: unit and item).

- Ignorable:

- Missing Completely at Random: the probability that any observation is missing doew not depend on any other variable in the dataset (observed or unobserved)

- Missing at Random: the probability that any observation is missing only depends on other observed variables.

- Non-ignorable

- Not Missing at Random (NMAR): The probability that any observation is missing depends on unobserved values. Also know as non-ignorable.

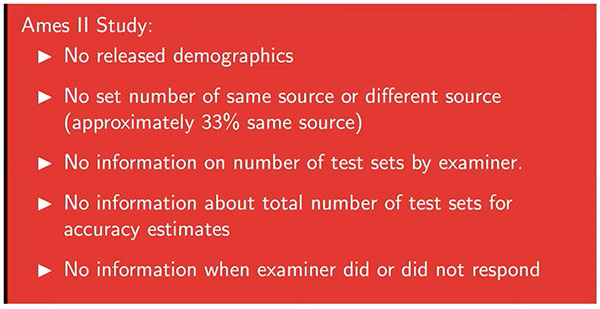

To make this determination, the following data at a minimum must be known:

- The participants who enrolled and did not participate

- The participants who enrolled and did participate

- Demographics for each of these groups of examiners

- The total number of test sets and types assigned to each examiner

- For each examiner, a list of the items he/she did or did not answer

- For each examiner, a list of the items he/she did or did not correctly answer

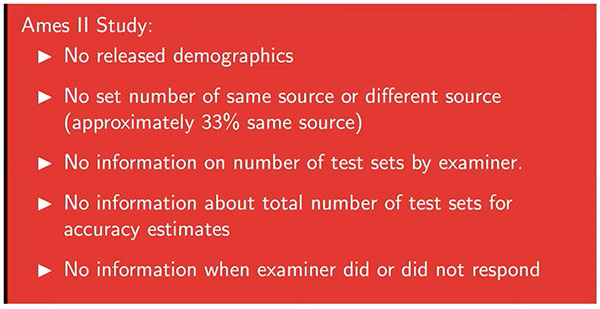

Most Black Box studies do not release this information or the raw data. For example:

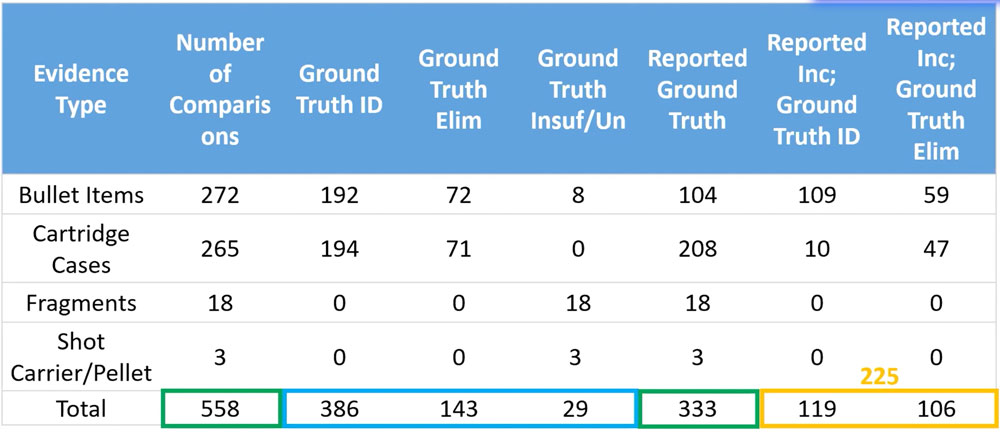

However, study made much of the necessary data known, allowing researchers to study missingness empirically. If there is a characteristic of examiners that is associated with higher error rates, and if that characteristic is also associated with higher levels of missingness, we have evidence that the missingness is non-ignorable and can come up with ways to address it.

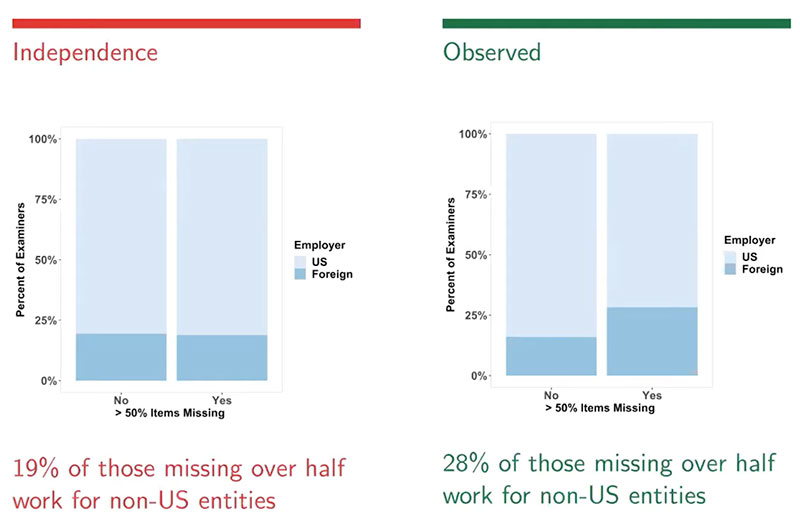

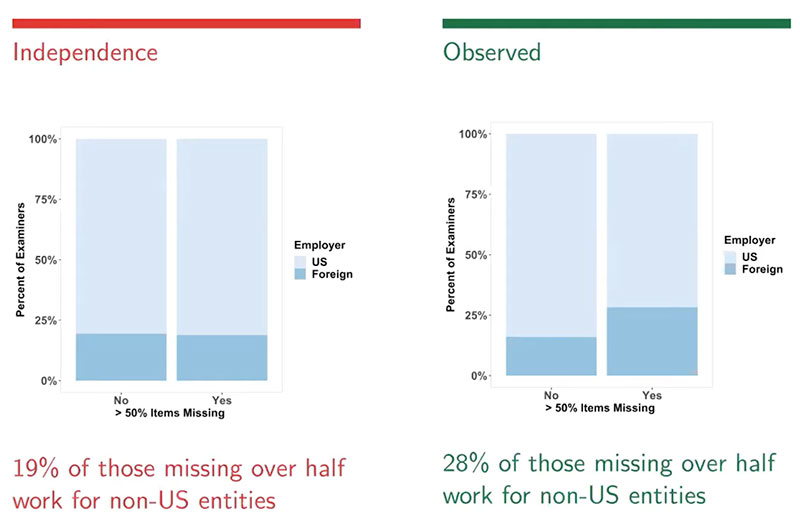

In this example, of the 226 examiners who returned some test sets in the studies, 197 of those also had demographic information. Of those 197, 53 failed to render a decision for over half of the 75 tests presented to them. The EDC study noted that examiners who worked for non-U.S. entities committed 50% of the false positives made in the study, but only accounted for less than 20% of the examiners. Researchers wanted to discover whether examiners who worked for non-U.S. entities had higher rates of missingness. After analyzing the data, researchers found that instead of the 19% of respondents that worked for non-U.S. entities that were expected to have a missingness of over half, the observed amount was 28% of respondents.

Researchers then conducted a hypothesis test to see if there was an association between working for a non-U.S. entity and missingness by taking a random sample size, calculating the proportion of foreign workers in the sample, repeating many times, and comparing the observed value of 28% to the calculated ones.

- H0: Working for a non-US entity is statistically independent of missingness

- HA: Working for a non-US entity is associated with a higher missingness

Using this method, researchers found that the observed result (28%) would occur only 4% of the time, if there was no relationship between missingness and working for a non-U.S. entity, meaning that there is strong evidence that working for a non-U.S. entity is associated with higher missingness.

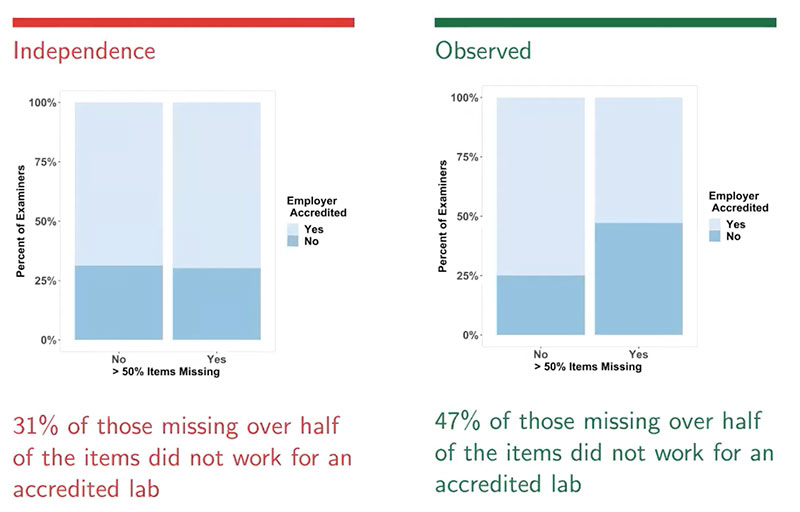

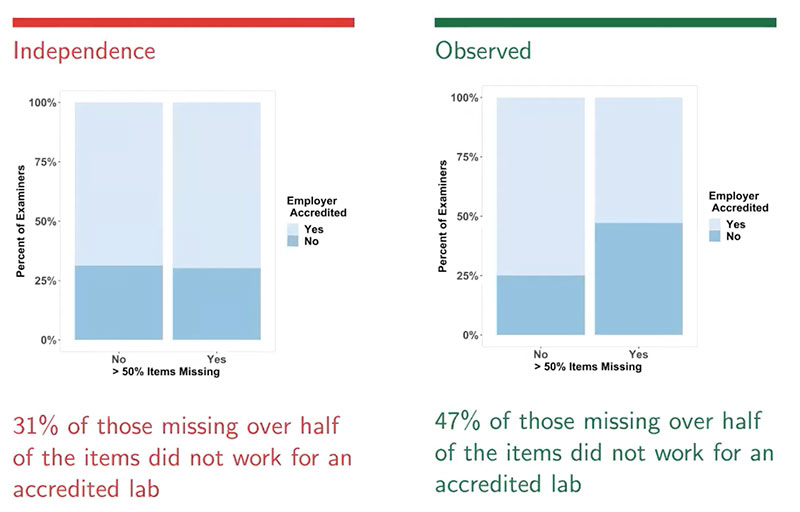

Researchers repeated the process to test whether missingness is higher among examiners who did not work for an accredited lab and had similar findings:

In this case, the hypothesis showed that his result (47% missingness) would only be expected about 0.29% of the time. Therefore, there is strong evidence that working for an unaccredited lab is associated with a higher missingness.

What are the next steps for gaining insights from Black Box studies?

The two issues discussed in this webinar—lack of a representative sample of participants and non-ignorable non-response—can be addressed in the short term with minor funding and cooperation among researchers.

Representation

- Draw a random sample of courts (state, federal, nationwide, etc.)

- Enumerate experts in each

- Stratify and sample experts

- Even if the person refuses to participate, at least we know in which ways (education, gender, age, etc.) the participants are or are not representative of the population of interest.

Missingness

- This is producing the biggest biases in the studies that have been published.

- Adjusting for non-response is necessary for the future of Black Box studies.

- Results can be adjusted if those who conduct the studies release more data and increase transparency to aid collaboration.

Longer term solutions include:

- Limiting who qualifies as an “expert” when testifying in court (existing parameters require minimal little to no certification, education, or testing)

- Institutionalized, regular discipline-wide testing with expectations of participation.

- Requirements to share data from Black Box studies in more granular form.