A new study that proposes a broad and versatile approach to strengthening expert decision making will be the focus of an upcoming Center for Statistics and Applications in Forensic Evidence (CSAFE) webinar.

The webinar, Improving Forensic Decision Making: A Human-Cognitive Perspective, will be held Thursday, Feb. 17, from 12–1 p.m. CST. It is free and open to the public.

During the webinar, Itiel Dror, a cognitive neuroscience researcher at University College London, will discuss his journal article, Linear Sequential Unmasking–Expanded (LSU-E): A general approach for improving decision making as well as minimizing noise and bias. The article, co-authored with Jeff Kukucka, associate professor of psychology at Towson University, was published in Forensic Science International: Synergy.

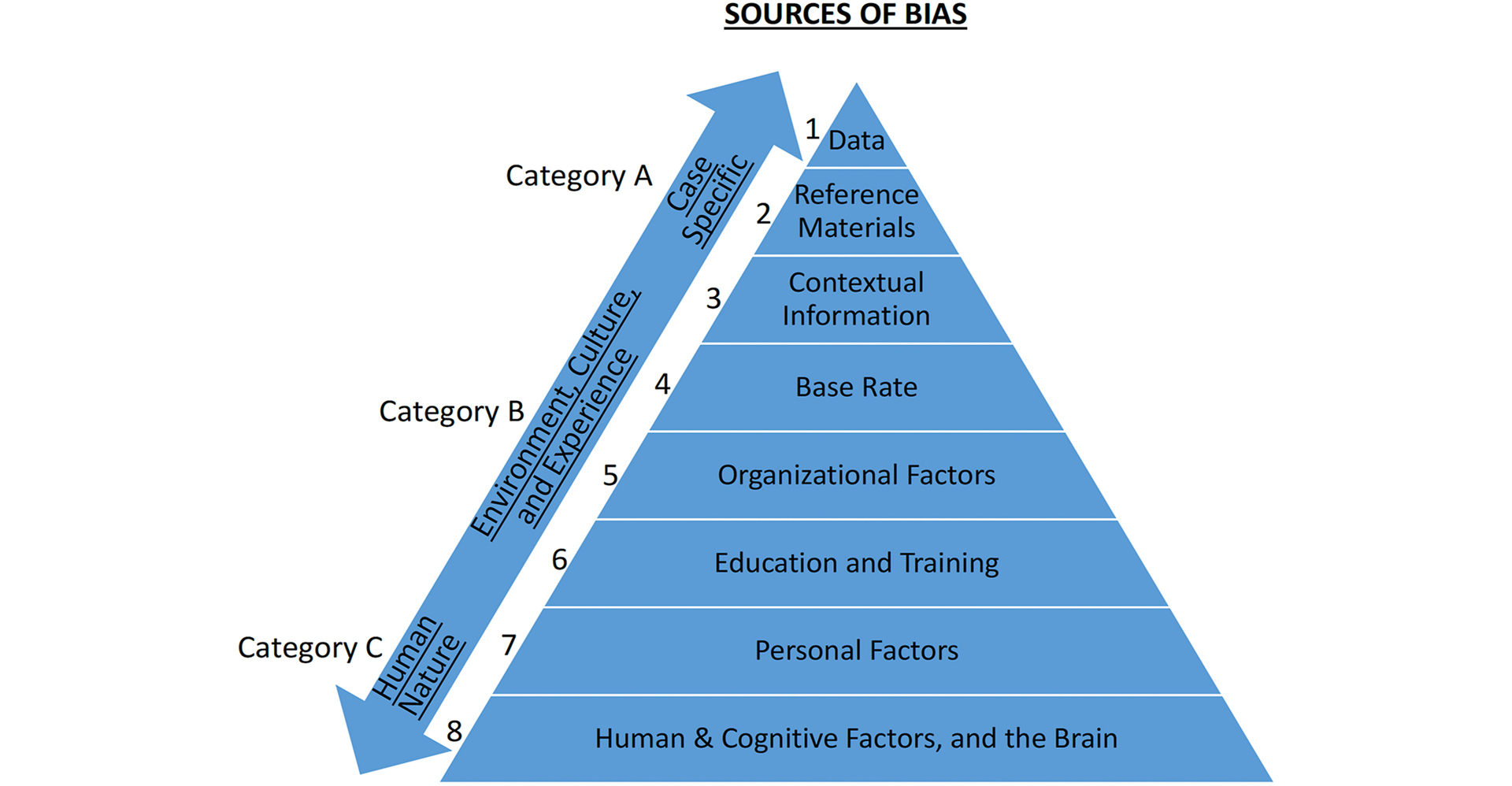

In the article, the authors introduce Linear Sequential Unmasking–Expanded (LSU-E), an approach that can be applied to all forensic decisions. LSU-E reduces noise and improves decisions "by cognitively optimizing the sequence of information in a way that maximizes information utility and thereby produces better and more reliable decisions."

From the abstract:

> In this paper, we draw upon classic cognitive and psychological research on factors that influence and underpin expert decision making to propose a broad and versatile approach to strengthening expert decision making. Experts from all domains should first form an initial impression based solely on the raw data/evidence, devoid of any reference material or context, even if relevant. Only thereafter can they consider what other information they should receive and in what order based on its objectivity, relevance, and biasing power. It is furthermore essential to transparently document the impact and role of the various pieces of information on the decision making process. As a result of using LSU-E, decisions will not only be more transparent and less noisy, but it will also make sure that the contributions of different pieces of information are justified by, and proportional to, their strength.

To register for the February webinar, visit https://forensicstats.org/events/.

The CSAFE Spring 2022 Webinar Series is sponsored by the National Institute of Standards and Technology (NIST) through cooperative agreement 70NANB20H019.