Guest Blog

Kori Khan

Assistant Professor

Department of Statistics, Iowa State University

We are currently in an era where machine learning and algorithms offer novel approaches to solving problems both new and old. Algorithmic approaches are swiftly being adopted for a range of issues: from making hiring decisions for private companies to sentencing criminal defendants. At the same time, researchers and legislators are struggling with how to evaluate and regulate such approaches.

The regulation of algorithmic output becomes simultaneously more complex and pressing in the context of the American criminal justice system. U.S. courts are regularly admitting evidence generated from algorithms in criminal cases. This is perhaps unsurprising given the permissive standards for admission of evidence in American criminal trials. Once admitted, however, the algorithms used to generate the evidence—which are often proprietary or designed for litigation—present a unique challenge. Attorneys and judges face questions about how to evaluate algorithmic output when a person’s liberty hangs in the balance. Devising answers to these questions inevitably involves delving into an increasingly contentious issue—access to the source code.

In criminal courts across the country, it appears most criminal defendants have been denied access to the source code of algorithms used to produce evidence against them. I write, “it appears,” because here, like in most areas of the law, empirical research into legal trends is limited to case studies or observations about cases that have drawn media attention. For these cases, the reasons for denying a criminal defendant access to the source code have not been consistent. Some decisions have pointed out that the prosecution does not own the source code, and therefore is not required to produce it. Others implicitly acknowledge that the prosecution could be required to produce the source code and instead find that the defendant has not shown a need for access to the source code. It is worth emphasizing that these decisions have not found that the defendant does not need access to source code; but rather, that the defendant has failed to sufficiently establish that need. The underlying message in many of these decisions, whether implicit or explicit, is that there will be cases, perhaps quite similar to the case being considered, where a defendant will require access to source code to mount an effective defense. The question of how to handle access to the code in such cases does not have a clear answer.

Legal scholars are scrambling to provide guidance. Loosely speaking, proposals can be categorized into two groups: those that rely on existing legal frameworks and those that suggest a new framework might be necessary. For the former category, the heart of the issue is the tension between the intellectual property rights of the algorithm’s producer and the defendant’s constitutional rights. On the one hand, the producers of algorithms often have a commercial interest in ensuring that competitors do not have access to the source code. On the other hand, criminal defendants have the right to question the weight of the evidence presented in court.

There is a range of opinions on how to balance these competing interests. These opinions run along a spectrum of always allowing defendants access to source code to rarely allowing defendants access to the code. However, most fall somewhere in the middle. Some have suggested “front-end” measures in which lawmakers establish protocols to ensure the accuracy of algorithmic output before their use in criminal courts. These measures might include an escrowing of the source code, similar to how some states have handled voting technology. Within the courtroom, suggestions for protecting the producers of code include utilizing traditional measures, such as the protective orders commonly used in trade secret suits. Other scholars have proposed a defendant might not always need access to source code. For example, some suggest that if the producer of the algorithm is willing to run tests constructed by the defense team, this may be sufficient in many cases. Most of these suggestions make two key assumptions: 1) either legislators or defense attorneys should be able to devise standards to identify the cases for which access to source code is necessary to evaluate an algorithm and 2) legislators or defense attorneys can devise these standards without access to the source code themselves.

These assumptions require legislators and defense attorneys to answer questions that the scientific community itself cannot answer. Outside of the legal setting, researchers are faced with a similar problem: how can we evaluate scientific findings that rely on computational research? For the scientific community, the answer for the moment is that we are not sure. There is evidence that the traditional methods of peer review are inadequate. In response, academic journals and institutes have begun to require that researchers share their source code and any relevant data. This is increasingly viewed as a minimal standard to begin to evaluate computational research, including algorithmic approaches. However, just as within the legal community, the scientific community has no clear answers for how to handle privacy or proprietary interests in the evaluation process.

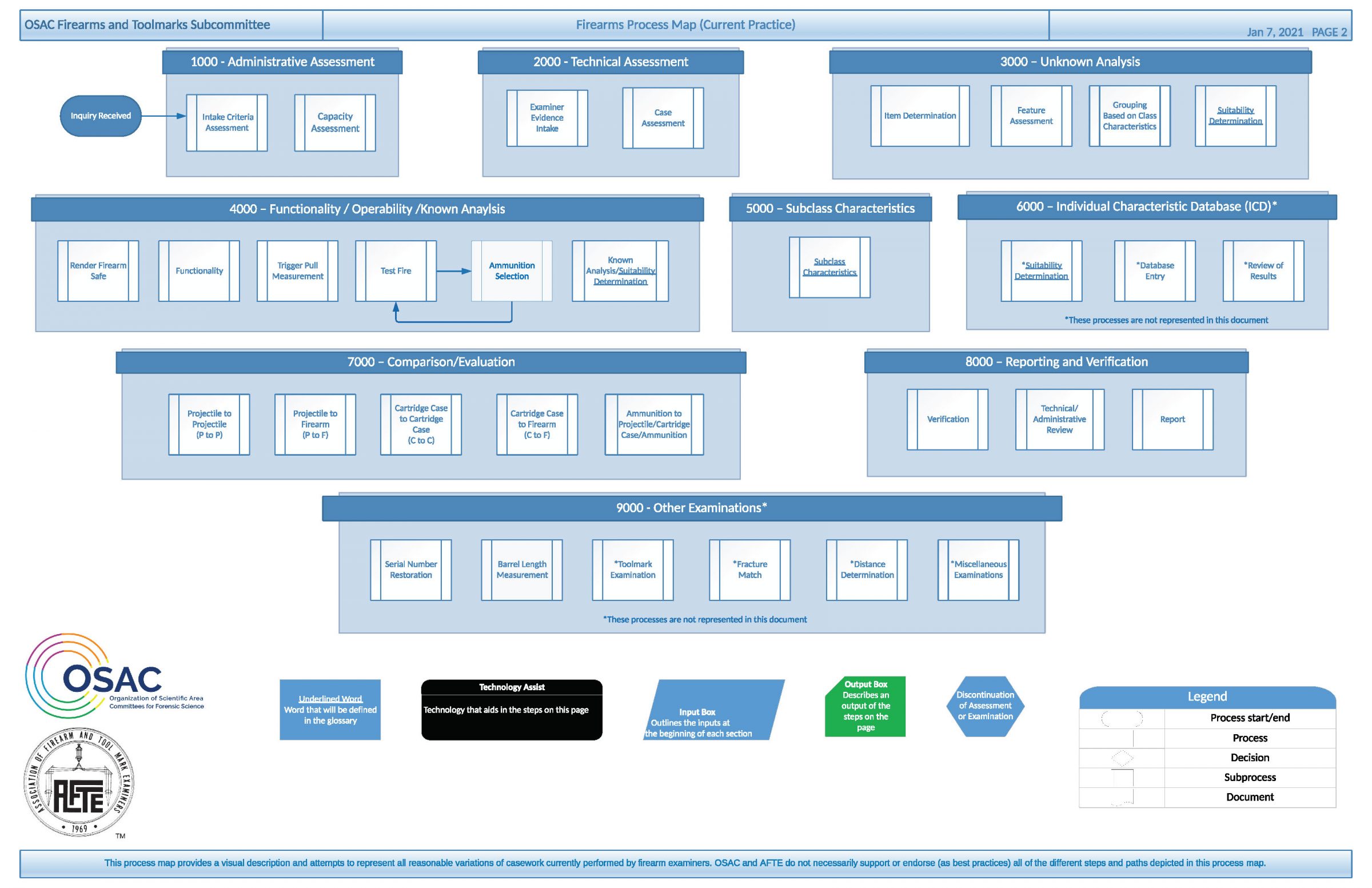

In the past, forensic science methods used in criminal trials have largely been developed and evaluated outside the purview of the larger scientific community, often on a case-by-case basis. As both the legal and scientific communities face the challenge of regulating algorithms, there is an opportunity to expand existing interdisciplinary forums and create new ones.

Learn about source code in criminal trials by attending the Source Code on Trial Symposium on March 12 at 2:30 to 4 p.m. Register at https://forensicstats.org/source-code-on-trial-symposium/.

Publications and Websites Used in This Blog:

How AI Can Remove Bias From The Hiring Process And Promote Diversity And Inclusion

Equivant, Northpoint Suite Risk Need Assessments

The Case for Open Computer Programs

Using AI to Make Hiring Decisions? Prepare for EEOC Scrutiny

Source Code, Wikipedia

The People of the State of New York Against Donsha Carter, Defendant

Commonwealth of Pennsylvania Versus Jake Knight, Appellant

The New Forensics: Criminal Justice, False Certainty, and the Second Generation of Scientific Evidence

Convicted by Code

Machine Testimony

Elections Code, California Legislative Information

Trade Secret Policy, United States Patent and Trademark Office

Computer Source Code: A Source of the Growing Controversy Over the Reliability of Automated Forensic Techniques

Artificial Intelligence Faces Reproducibility Crisis

Author Guidelines, Journal of the American Statistical Association

Reproducible Research in Computational Science