INSIGHT

Error Rates, Likelihood Ratios, and Jury Evaluation of Forensic Evidence

OVERVIEW

Forensic examiner testimony regularly plays a role in criminal cases — yet little is known about the weight of testimony on jurors’ judgment.

Researchers set out to learn more: What impact does testimony that is further qualified by error rates and likelihood ratios have on jurors’ conclusions concerning fingerprint comparison evidence and a novel technique involving voice comparison evidence?

Lead Researchers

Brandon L. Garrett, J.D.

William E. Crozier, Ph.D.

Rebecca Grady, Ph.D.

Journal

Journal of Forensic Sciences

Publication Date

22 April 2020

Publication Number

IN 106 IMPL

THE HYPOTHESIS

Participants would place less weight on voice comparison testimony than they would on fingerprint testimony, due to cultural familiarity and perceptions.

Participants who heard error rate information would put less weight on forensic evidence — voting guilty less often — than participants who heard traditional and generic instructions lacking error rates.

Participants who heard likelihood ratios would place less weight on forensic expert testimony compared to testimony offering an unequivocal and categorical conclusion of an ID or match.

APPROACH AND METHODOLOGY

WHO

900 participants read a mock trial about a convenience store robbery with 1 link between defendant and the crime

WHAT

2 (Evidence: Fingerprint vs. Voice Comparison)

x 2 (Identification: Categorical or Likelihood Ratio)

x 2 (Instructions: Generic vs. Error Rate) design

HOW

Participants were randomly assigned to 1 of the 8 different conditions

After reading materials + jury instructions, participants decided whether they would vote “beyond-a-reasonable-doubt” that the defendant was guilty
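The 2 x 2 x 2 design and random assignment described above can be sketched in a few lines. This is only an illustration: the function names, the fixed seed, and the use of simple (unbalanced) random assignment are assumptions, since the summary states only that participants were randomly assigned to 1 of 8 conditions.

```python
import itertools
import random
from collections import Counter

# Factor levels as named in the study design (2 x 2 x 2).
EVIDENCE = ["Fingerprint", "Voice Comparison"]
IDENTIFICATION = ["Categorical", "Likelihood Ratio"]
INSTRUCTIONS = ["Generic", "Error Rate"]


def build_conditions():
    """Return every combination of the three factors (8 conditions)."""
    return list(itertools.product(EVIDENCE, IDENTIFICATION, INSTRUCTIONS))


def assign_participants(n_participants, seed=0):
    """Assign each participant to one condition uniformly at random.

    Assumption: simple random assignment with a hypothetical seed;
    the study's actual procedure is not described in this summary.
    """
    rng = random.Random(seed)
    conditions = build_conditions()
    return [rng.choice(conditions) for _ in range(n_participants)]


conditions = build_conditions()
assignments = assign_participants(900)

# Tally how many participants landed in each of the 8 cells.
counts = Counter(assignments)
for condition in conditions:
    print(condition, counts[condition])
```

With simple random assignment the cell sizes will differ slightly from run to run; a balanced design would instead shuffle a list containing each condition an equal number of times.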

KEY TAKEAWAYS FOR PRACTITIONERS

Laypeople gave more weight to fingerprint evidence than voice comparison evidence.

Fewer guilty verdicts arose from voice evidence — novel forensic evidence methods might not provide powerful evidence of guilt.

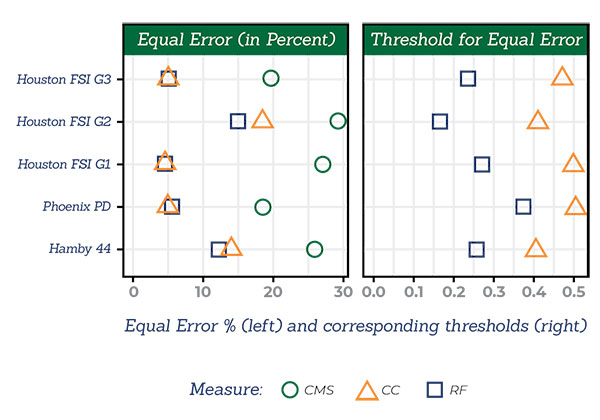

Jurors give fingerprint evidence less weight when they learn about error rates.

Error rate information appears particularly important for types of forensic evidence that people may already assume to be highly reliable.

Participants considering fingerprint evidence were more likely to find the defendant not guilty when provided instruction on error rates. When the fingerprint expert offered a likelihood ratio, the error rate instructions did not decrease guilty verdicts.

When asked which is worse (wrongly convicting an innocent person, failing to convict a guilty person, or both equally), the majority of participants were more concerned with wrongly convicting an innocent person.

Participants who believed convicting an innocent person was the worse error were less likely to vote guilty because they had more doubt in the evidence.

Those who had greater concern for releasing a guilty person were more likely to vote guilty.

of participants believed the errors were equally bad.

Researchers found, overall, that presenting an error rate moderated the weight of evidence only when paired with a fingerprint identification.

FOCUS ON THE FUTURE

To produce better judicial outcomes when juries are formed with laypeople:

Direct efforts toward offering more explicit judicial instructions.

Craft a better explanation of evidence limitations.

Consider the findings when developing new forensic techniques: a jury may trust a new technique less even if it proves more reliable and has lower error rates.

Pay attention to juror preconceptions about the reliability of evidence.